- Modular blockchain architectures offer an alternative that can serve a variety of needs, and Celestia brings innovative solutions to this field.

- By providing Data Availability and Consensus functions to different application layers, Celestia sits at a key point in the development of alternative architectures.

- Because it secures data availability through sampling, it eases the storage burden that constrains scaling.

- Celestium can offer a more secure alternative to off-chain data storage for Layer-2 solutions built on top of Celestia and Ethereum.

- With a rollup-specific, EVM-based settlement layer and its Recursive Rollup structure, Cevmos aims to provide an architecture that Layer-2 solutions may prefer.

As various Layer-1 blockchains have emerged and built their own ecosystems, we talk about a multi-chain world more than ever. Each architecture offers different advantages to users and addresses a different corner of the well-known scalability trilemma, so each ecosystem has attracted its own following. In particular, new roadmaps that involve architectural changes through scaling solutions such as Rollups and Subnets have become a main topic of discussion. At this point, we believe it is essential to have a basic understanding of Monolithic and Modular Blockchain architectures.

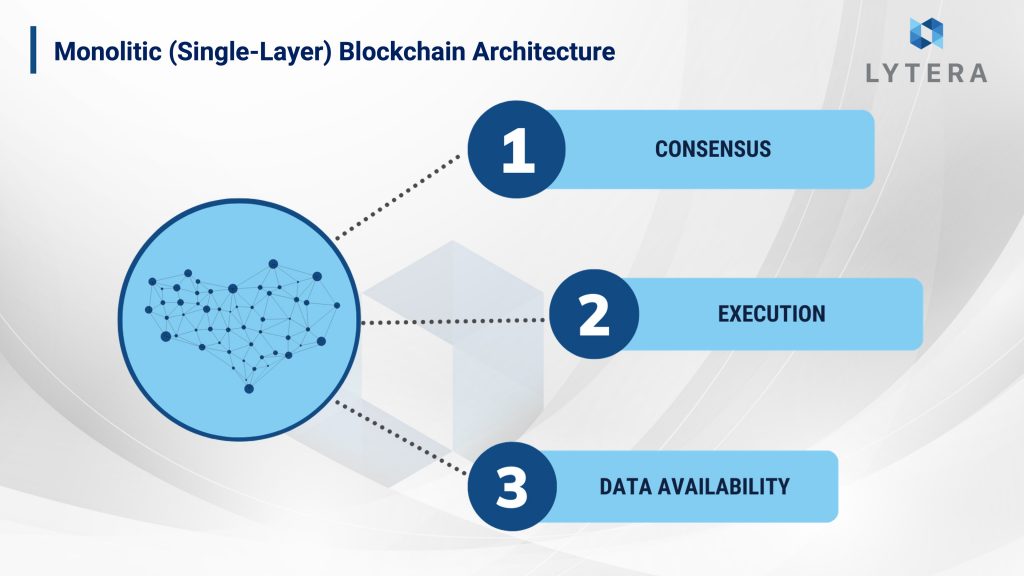

Monolithic Blockchain Architecture

Almost all the blockchains in active use today have a monolithic architecture: Consensus, Data Availability and Execution all take place on the same layer and are handled by the same set of nodes.

Consensus: For blockchains, consensus is the method by which validators or miners come to an agreement on the validity of transactions on the chain. Consensus also determines the order of the verified transactions and thereby establishes the history of the blockchain.

Execution: This is the process of computing how a user's transaction changes the state of the chain and updating the affected parameters accordingly.

Data Availability: Data availability is one of the most important problems that blockchains have to solve on the path to scaling. In essence, it is the verification that the block producer has actually shared all the data of a block (header + tx data) with the other nodes.

The data in a newly produced block can be verified by two types of node: Full Nodes and Light Nodes. A Full Node downloads all the data on the blockchain and can therefore check the validity of the data in a new block. This can demand powerful hardware, depending on the block size and the total size of the chain. A Light Node, on the other hand, checks only the block header rather than the entire block to confirm that a block is consistent with the previous blocks. Because it does not check all the data, it offers weaker guarantees. The high requirements of Full Nodes and the inability of Light Nodes to provide sufficient security limit how far blockchains can push block size and verification performance.

Ultimately, the ideal for DA (Data Availability) is that Light Nodes can be sure the block producer is sharing all the data with the network without having to download all of it, while Full Nodes remain able to verify all the data. We elaborate on this subject later in the report (see Data Availability Sampling).

Returning to monolithic architecture, we can define it as an architecture in which all three of the aforementioned functions are undertaken by a single blockchain infrastructure. EVM-supporting chains such as Ethereum, Polygon, and Avalanche C-Chain work exactly in this manner. Therefore, however scaling is defined, beyond a certain level the intrinsic limitations of the network and the validators cap how far speed, throughput and storage requirements can be improved at the same time.

Modular Blockchain Architecture

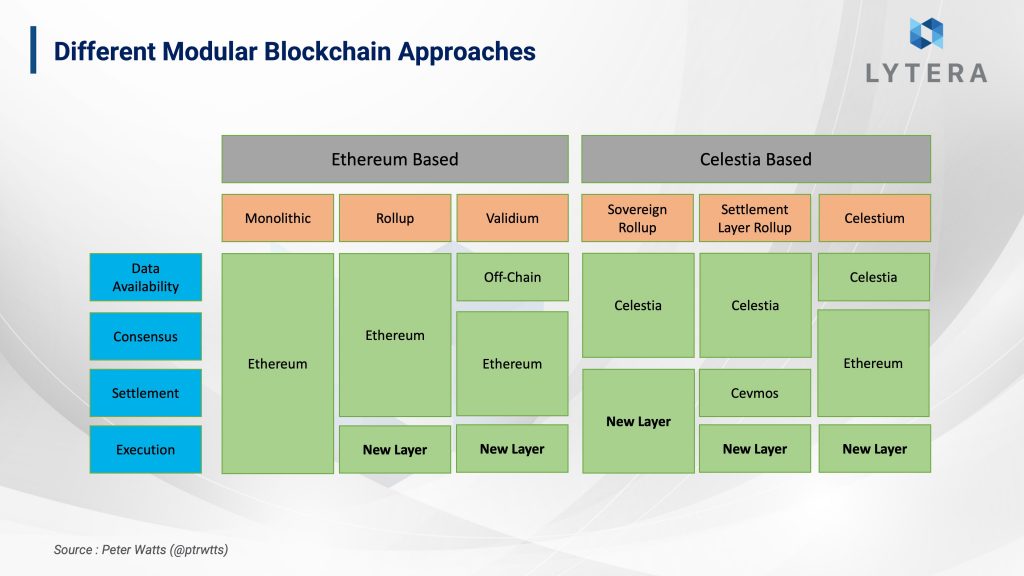

The basis of modular architecture is to build separate structures that interoperate, instead of loading every function into a single structure. In the blockchain world, an architecture is Modular when the Consensus, Execution and Data Availability functions described above are distributed across multiple layers. Such a mechanism makes it possible to go beyond the intrinsic scaling limitations of a single chain.

What advantages does modularity offer?

Scalability:

Specializing different layers to perform different core functions results in a more efficient architecture. A significant advantage is that tuning one function for better speed or smaller storage does not affect another function.

Interoperability:

Different blockchains can interoperate through a common layer when multiple layers are built on top of a layer that offers a specific function according to a specific standard (e.g. a Consensus or DA Layer). Ethereum, which offers a common validation space for different L2 solutions in the Rollup architecture, is an example of this kind of interoperability.

Flexibility and Fast Adaptation:

Thanks to modular structures, new solutions can be offered by combining different layers, much like LEGO bricks. Once the function of each layer and the standard it operates under are specified, these layers can be combined into different solutions more flexibly, and integration becomes faster.

Infinite Possibilities and Experiment Zone:

As layer diversity increases, architectures that solve multiple problems through different combinations may emerge. Additionally, by keeping certain functions fixed on different layers, modularity offers a development environment with the flexibility to run isolated experiments on new layers for novel solutions.

CELESTIA

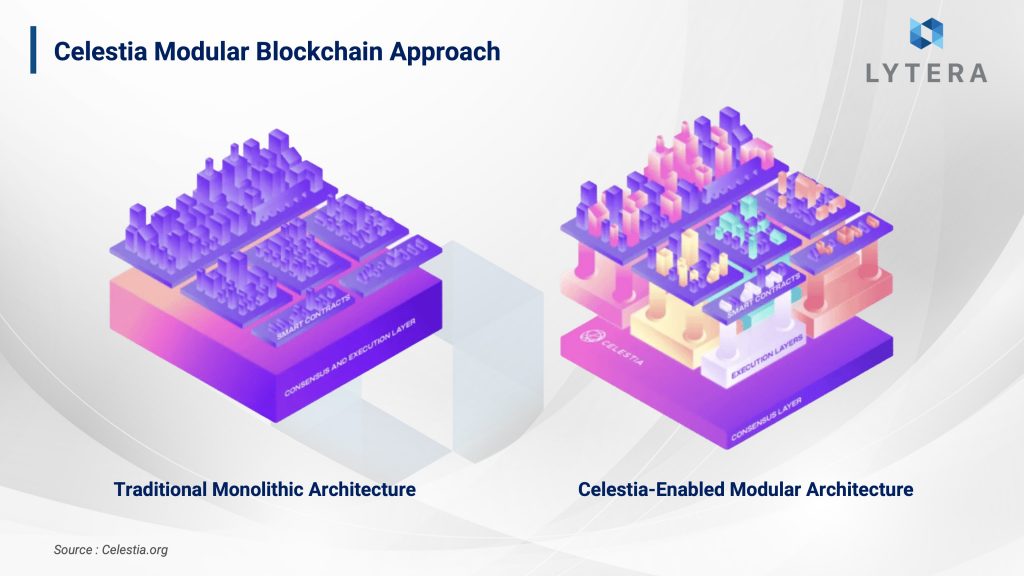

Having discussed modular and monolithic architectures, we can now proceed to the details of Celestia, which offers a modular blockchain architecture.

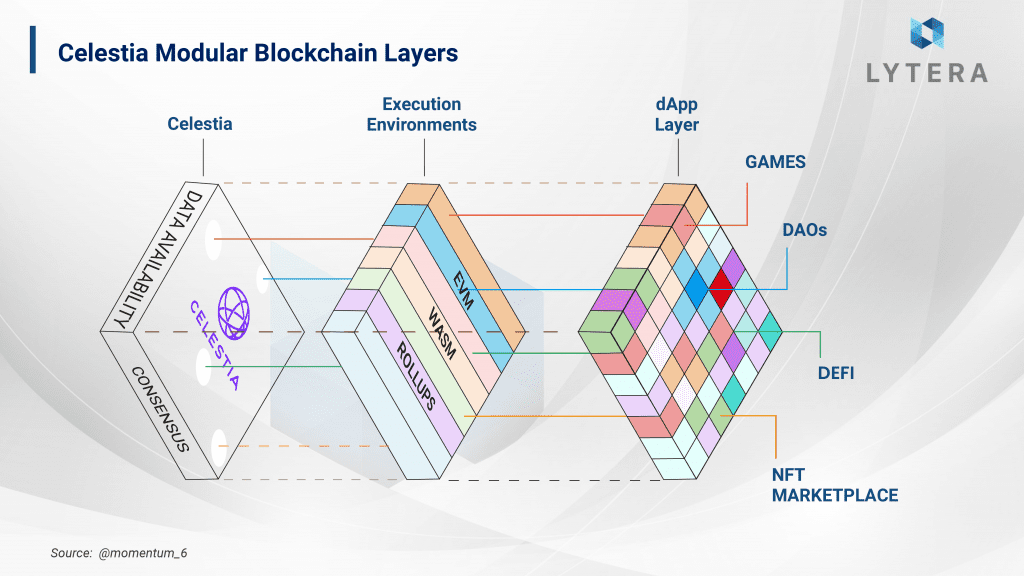

Celestia is a blockchain designed to serve as a base layer that provides Consensus and DA by separating the Consensus, Execution and DA functions, the essentials of a modular architecture. The main objective is to create a scalable base layer that can be integrated into different blockchain architectures.

As can be seen in the image, Celestia handles Consensus and Data Availability, and different Execution Layers can be developed on top of it. Accordingly, Celestia provides a more functional architecture for developers while serving as a shared consensus and data availability layer for different applications.

Because the main function of Celestia is Consensus and DA, the burden on Full Nodes can be reduced. As the execution layer is separate, nodes are freed from executing the transactions in each block to compute and apply changes to the state machine. It is sufficient for Celestia validators to reach consensus on the order of transactions. Checking that the relevant block data has been shared with the network is likewise carried out by Celestia. Verifying whether the transactions are valid is not the responsibility of Celestia validators.
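The separation described here can be illustrated with a minimal Python sketch. It is a toy model, not Celestia's implementation: `OrderingLayer` and `execute` are hypothetical names, and the transfer format and validity rule are assumptions chosen only to show that the base layer orders data while an execution layer decides what is valid.

```python
from dataclasses import dataclass, field


@dataclass
class OrderingLayer:
    """Hypothetical stand-in for a consensus + DA layer: it only orders and stores blobs."""
    blocks: list = field(default_factory=list)

    def publish(self, blobs):
        # No execution and no validity check: the blobs are only ordered and kept.
        self.blocks.append(list(blobs))
        return len(self.blocks) - 1  # height of the new block


def execute(initial_balances, blocks):
    """Hypothetical execution layer: replays the ordered blobs under its own rules."""
    state = dict(initial_balances)
    for block in blocks:
        for sender, receiver, amount in block:
            if amount <= 0 or state.get(sender, 0) < amount:
                continue  # invalid for this execution layer, so it is skipped
            state[sender] -= amount
            state[receiver] = state.get(receiver, 0) + amount
    return state


layer = OrderingLayer()
layer.publish([("alice", "bob", 30), ("bob", "carol", 50)])  # second transfer overspends
layer.publish([("bob", "carol", 10)])

final_state = execute({"alice": 100}, layer.blocks)
print(final_state)  # {'alice': 70, 'bob': 20, 'carol': 10}
```

The overspending transfer is still ordered and stored by the base layer; it is only the execution layer that rejects it when it replays the data.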

Data Availability Sampling (DAS)

We mentioned Data Availability in the earlier sections of this report. Here we explain how Celestia can ensure data availability while enabling a higher transaction volume and larger block sizes.

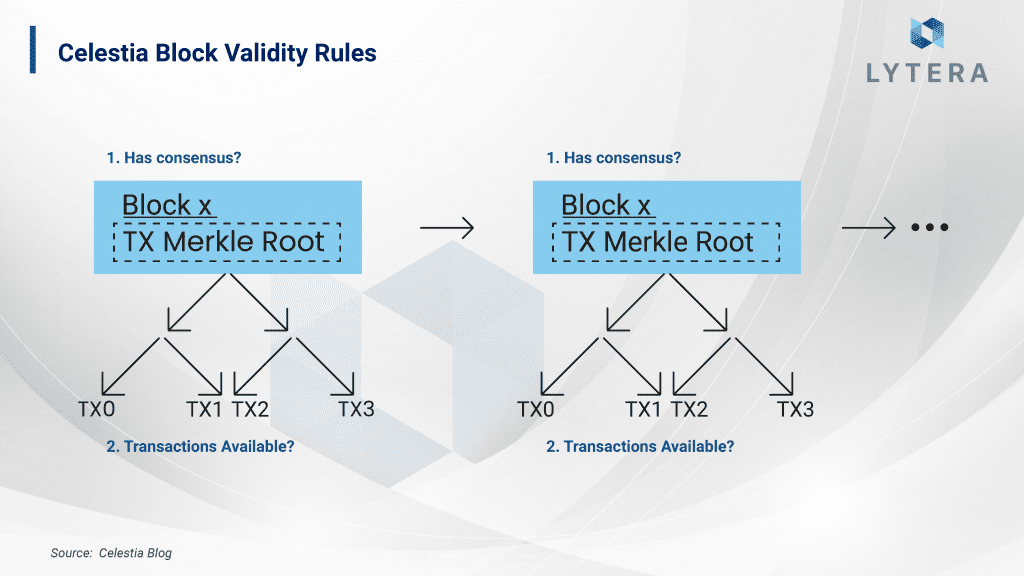

Blocks on a blockchain consist of two parts: the header and the transaction data, to which the header commits through the TX Merkle Root.

- Full Node: By downloading and storing all the data on a blockchain, it can check whether all transaction data have been shared with the network when a new block is produced.

- Light Node: By checking only the block header, it assumes that the data is available and accessible on the network, on the condition that the other actors are honest.
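To make the role of the header concrete, the following Python sketch builds a Merkle root over a list of transactions and checks an inclusion proof against it. It is a simplified illustration: the hashing scheme (plain SHA-256, duplicating the last node on odd levels, no domain separation) is an assumption for brevity and does not match any particular chain's format. Note that a valid proof only shows that a transaction is committed to by the header; it says nothing about whether the rest of the block data was published, which is the gap the two node types above handle differently.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level):
    # Pair up nodes; an odd node at the end is paired with itself.
    if len(level) % 2:
        level = level + [level[-1]]
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    """Root over a non-empty list of byte strings."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each tagged with whether it sits on the left."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(leaf, proof, root):
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


txs = [b"tx0", b"tx1", b"tx2"]
root = merkle_root(txs)           # the value a header would carry
proof = merkle_proof(txs, 2)      # produced by a node that holds the full data
print(verify_inclusion(b"tx2", proof, root))     # True
print(verify_inclusion(b"forged", proof, root))  # False
```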

When data availability rests on Full Nodes alone, any increase in the number or size of blocks translates directly into higher hardware requirements. Celestia aims to solve this problem by producing a proof from a much smaller amount of data. The way to do so is to give Light Nodes an active role in verifying data availability, without requiring them to download and store the entire blockchain, while also eliminating the assumption that other validators are honest. This makes validation possible with lower hardware requirements and reinforces decentralization by increasing the number of Light Nodes.

This is where “Data Availability Sampling” comes into play. In essence, it is a procedure for probabilistically verifying that a large body of data is complete and available by checking only a small part of it.

For this verification, it is sufficient to download and check small random parts of the data. With the “Erasure Coding” method, the data is extended with redundancy so that these small samples are enough to vouch for the whole. However, to reach a high-probability verification, more than one user must take part in sampling, with the number needed depending on the ratio (size of data downloaded / size of entire data).
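As a rough illustration of what erasure coding buys, here is a toy one-dimensional Reed-Solomon style code in Python. It is a sketch under stated assumptions, not Celestia's actual encoding: the prime modulus `P`, the function names and the choice to double the data are all illustrative. The `k` data symbols are treated as values of a polynomial at `x = 0..k-1`, the polynomial is evaluated at `k` further points, and any `k` of the resulting `2k` shares are enough to rebuild the original data.

```python
P = 65537  # prime modulus of the toy field (an assumption for this sketch)


def interpolate(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data):
    """Extend k symbols (each < P) to 2k shares; the first k shares equal the data."""
    k = len(data)
    points = list(enumerate(data))
    return [interpolate(points, x) for x in range(2 * k)]


def recover(shares, k):
    """Rebuild the k original symbols from a dict {share index: value} with >= k entries."""
    points = list(shares.items())[:k]
    return [interpolate(points, x) for x in range(k)]


data = [10, 20, 30, 40]
shares = encode(data)

# Half of the shares are lost, including three of the four original symbols.
surviving = {i: shares[i] for i in (1, 4, 6, 7)}
print(recover(surviving, len(data)))  # [10, 20, 30, 40]
```

Because any half of the shares suffices, a block producer who wants to make the data unrecoverable has to withhold more than half of them, and that is what makes a handful of random samples informative.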

By repeatedly taking random 10 MB samples from a file containing 1 GB of data, it is possible to verify the availability of the entire data with a confidence above 99%. When the block size is increased, the verification can still be carried out with 10 MB samples. However, to keep the confidence above 99%, more users must carry out the verification process.
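The arithmetic behind such confidence figures can be sketched as follows. This is a simplified model under explicit assumptions: samples are independent and uniformly random, and `withheld_fraction` is the minimum share of the extended data a producer must hide to make the block unrecoverable (more than 0.5 for a rate-1/2 one-dimensional code; the value differs for other encodings). If a fraction f is withheld, s samples all succeed with probability (1 - f)^s, so the confidence of catching the withholding is 1 - (1 - f)^s.

```python
import math


def confidence(samples, withheld_fraction):
    """Probability that at least one of `samples` random queries hits a withheld share."""
    return 1 - (1 - withheld_fraction) ** samples


def samples_needed(target, withheld_fraction):
    """Smallest number of samples whose confidence reaches `target`."""
    return math.ceil(math.log(1 - target) / math.log(1 - withheld_fraction))


print(samples_needed(0.99, 0.5))   # 7
print(confidence(7, 0.5))          # 0.9921875
print(samples_needed(0.99, 0.25))  # 17
```

In this model the number of samples depends on the target confidence and the withheld fraction, not on the block size, which matches the observation that the sample size can stay fixed as blocks grow.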

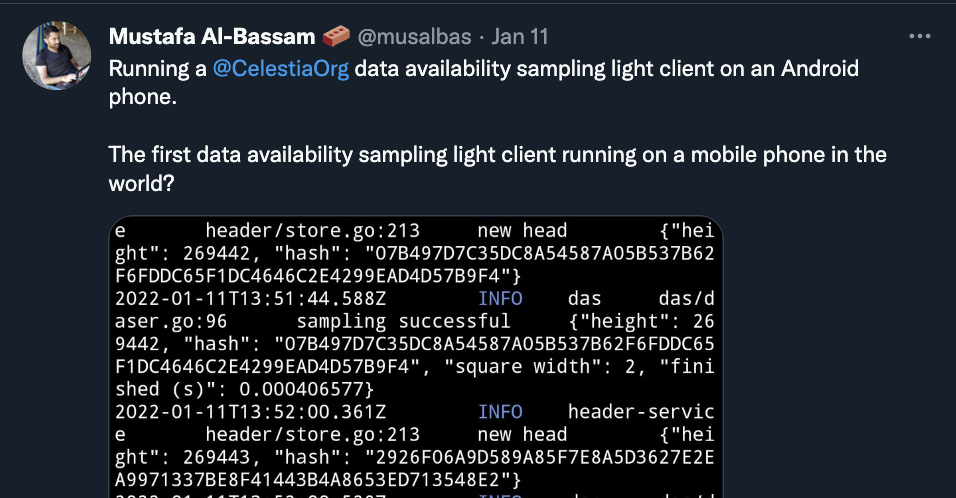

As long as the number of nodes is sufficient, data availability can be ensured with very little storage and affordable computation. A notable example is a tweet by Mustafa Al-Bassam, one of the founders of Celestia, showing that DAS can be performed even on a mobile phone.

Celestia-Enabled Modular Architecture

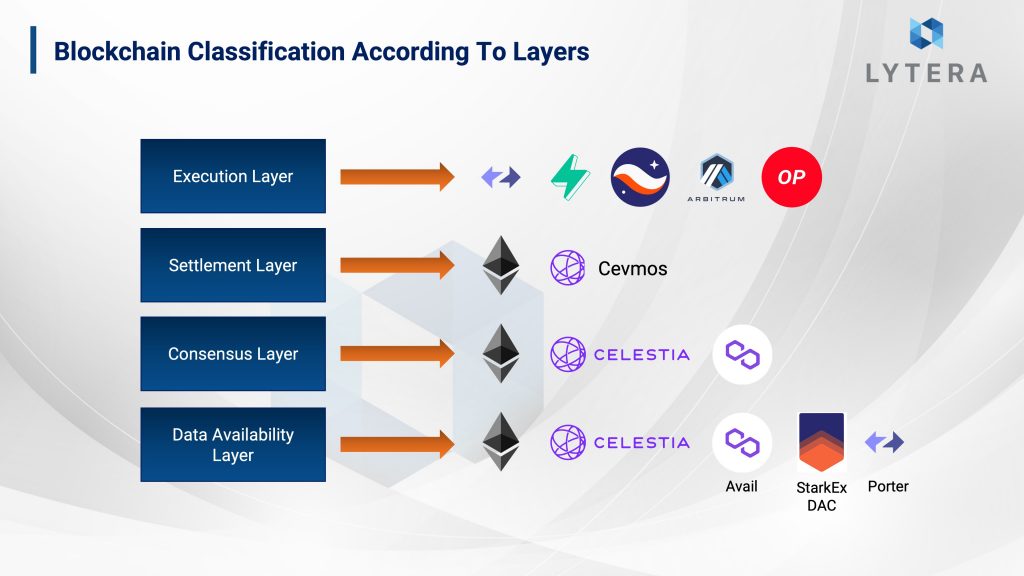

We have already discussed that Celestia offers a consensus and data availability infrastructure that multiple execution layers can use. On the application side, there are several ways for developers and projects to utilize Celestia.

1. Celestia Based Sovereign Rollups

Celestia is designed to enable rollup execution layers that are independent in their architecture and governance. These layers rely on Celestia to order transactions through consensus and to store their data in “Namespaces” that are separated per layer. However, they use their own execution mechanism to verify transactions and update the state machine.
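The idea of namespaces can be sketched in a few lines of Python. This is a conceptual illustration only: the block layout, the namespace labels and the `blobs_for` helper are hypothetical, and the sketch omits the cryptographic proof a real protocol needs to show that no blob of a namespace was left out.

```python
def blobs_for(block, namespace):
    """Return, in block order, the blobs published under one namespace."""
    return [blob for ns, blob in block if ns == namespace]


# One ordered block on the shared layer, carrying data for two independent rollups.
block = [
    ("rollup-a", b"a-batch-1"),
    ("rollup-b", b"b-batch-1"),
    ("rollup-a", b"a-batch-2"),
]

# Each rollup reads only its own namespace and feeds it to its own execution logic.
print(blobs_for(block, "rollup-a"))  # [b'a-batch-1', b'a-batch-2']
print(blobs_for(block, "rollup-b"))  # [b'b-batch-1']
```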

The layers built on top of Celestia can be constructed as EVM-based, WASM-based or other rollup execution layers, and are independent of Celestia in terms of processing capacity and the technical capabilities of the applications developed on them.

2. Celestium: Hybrid L2 Solution Using Ethereum and Celestia

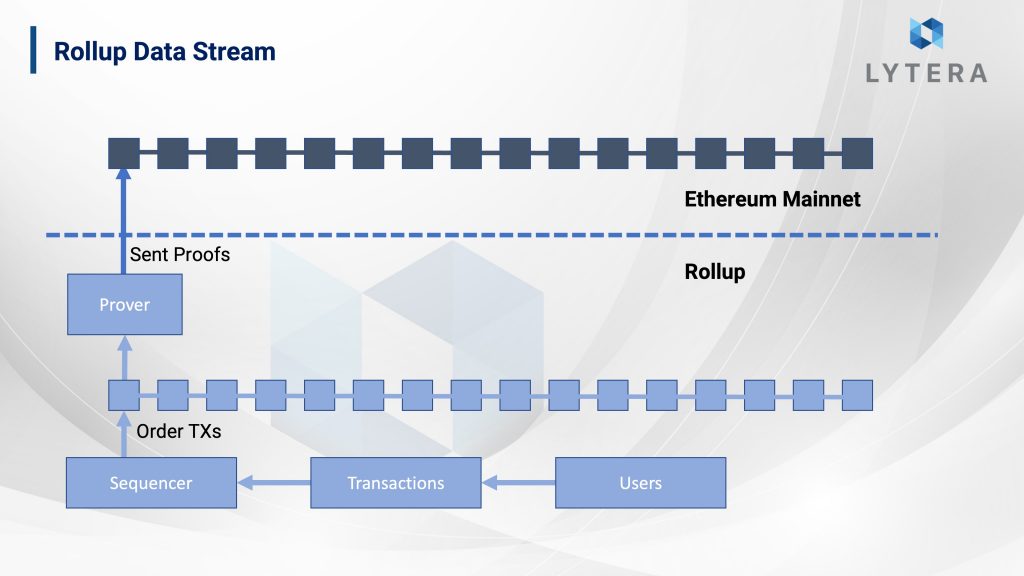

To understand Celestium better, we believe it is necessary to revisit the relationship between Ethereum and Rollups.

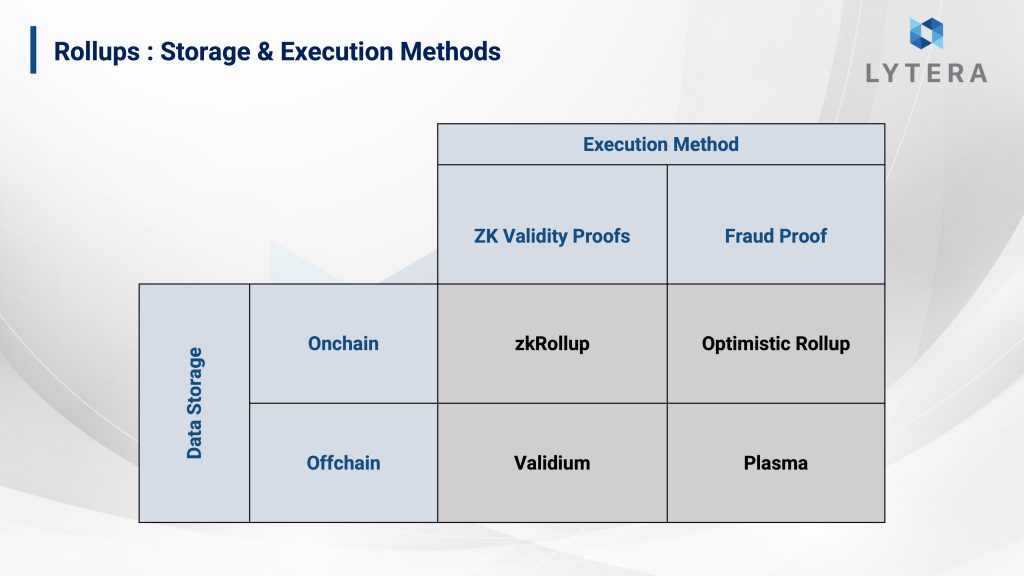

In a classic Rollup architecture, transactions are first validated and executed on the rollup, and then sent to the Ethereum network in a batch together with a proof. Hence, Ethereum is used as both the consensus and the data availability infrastructure. However, because scaling on Ethereum is costly, off-chain methods such as Validium have already been developed, especially to ensure data availability.

In the Validium architecture, zero-knowledge validity proofs are sent to Ethereum, while data availability and storage are handled through an off-chain mechanism. Although this data is secured by a distributed data committee and a distribution of authority, the approach falls short in terms of decentralization and security.

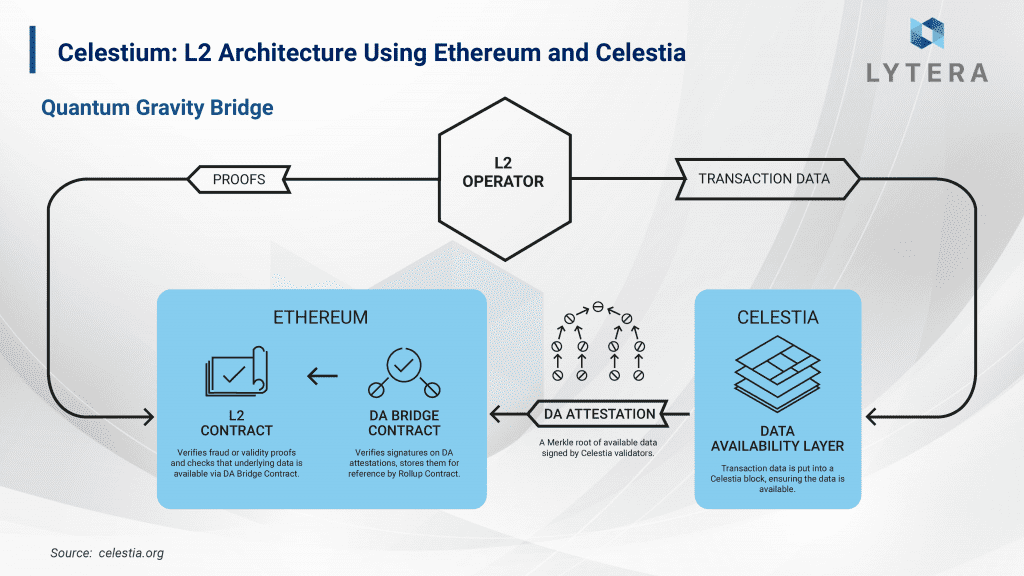

Celestium offers a new Layer-2 blockchain architecture. In this architecture, Ethereum is used as the consensus layer and Celestia is positioned as the data availability layer. Celestium (Layer-2) sits on top of Ethereum and Celestia as the execution layer.

Celestia aims to provide this data availability attestation service through the Quantum Gravity Bridge (Data Availability Bridge). The bridge is located on the Ethereum network. Transactions sent to Celestia by the Layer-2 Operator are ordered by Celestia validators and turned into a block. Afterward, a data availability attestation is signed by Celestia validators and forwarded to Ethereum.
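The acceptance check such a bridge might perform can be sketched in Python. This is a hypothetical model, not the Quantum Gravity Bridge contract: the function name, the voting-power table and the two-thirds threshold are assumptions (the threshold is borrowed from common BFT practice), and signature verification is left out entirely.

```python
from fractions import Fraction


def attestation_accepted(voting_power, signers, threshold=Fraction(2, 3)):
    """Accept an attestation if validators holding more than `threshold` of the power signed.

    voting_power: dict validator -> power (hypothetical snapshot of the validator set)
    signers: validators whose signatures are assumed to have been verified already
    """
    total = sum(voting_power.values())
    if total == 0:
        return False
    signed = sum(power for validator, power in voting_power.items() if validator in signers)
    return Fraction(signed, total) > threshold


powers = {"val-a": 40, "val-b": 35, "val-c": 25}
print(attestation_accepted(powers, {"val-a", "val-b"}))  # True  (75/100 > 2/3)
print(attestation_accepted(powers, {"val-a", "val-c"}))  # False (65/100 < 2/3)
```

Using exact fractions avoids rounding surprises when the signed power sits right at the threshold.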

This mechanism stores the data on Celestia and transfers the proof (attestation) to Ethereum, so it may be a relatively more expensive service than solutions such as Validium, where data is stored off-chain. However, considering the security provided, Layer-2 solutions may find it a reasonable alternative, depending on the transaction fees on Celestia.

3. Cevmos: Celestia + Evmos + Cosmos

Cevmos can be defined as an EVM-supporting modular blockchain architecture. Created through the cooperation of Celestia, Evmos and Cosmos, it is designed as a custom settlement layer for rollups.

It will enable transfers over trust-minimized bridges between the Settlement Layer and Rollups, and disputes will also be resolved by the settlement layer. The concept parallels the relationship between zkRollups and Ethereum. However, unlike Ethereum, this chain is designed to be used entirely by Rollups.

In the proposed architecture, an Evmos-based chain (with EVM and Cosmos SDK support) is designed as a Celestia rollup and uses Optimistic Tendermint (Optimint) instead of classic Tendermint. The Evmos-based Celestia rollup chain will be connected to the Evmos Hub via IBC, and $EVMOS will function as the gas token of the chain.

Because the Evmos-based chain is itself a rollup on Celestia and further rollups will be built on top of it, the structure is defined as a Recursive Rollup.

CONCLUSION

Celestia takes a remarkable approach, offering a modular architecture and optional solutions suited to different use cases. The fact that the team includes figures such as Mustafa Al-Bassam, John Adler and Ismail Khoffi is one of the factors that raise expectations for the project.

The implications of Celestia can be seen as significant: thanks to its sampling solution for data availability, it has the potential to remove an important scaling limitation of many monolithic chains such as Ethereum. Additionally, Celestium provides an alternative for the data layer of rollups that fits the Ethereum roadmap. Celestia may also be in an advantageous position in terms of product-market fit, as the Cevmos architecture is designed as a custom settlement layer for rollups.

Celestia, whose final testnet has already been carried out, is expected to launch its mainnet in 2023. It is therefore highly likely that 2023 will be the year in which we can observe concretely whether modular architectures deliver the expected improvements and how they will be positioned in the wider ecosystem.